We get the same number as before, and the nvidia-smi output also shows that we are using a V100 GPU with 16 GB of memory.

Get started with TF32 today

The A100 arrives in the market a decade after the first GPU instance went live in the cloud. With its TF32 precision, as well as other features like Multi-Instance GPU (MIG) and accelerated structural sparsity, it propels GPU-accelerated computing into the next decade of cloud GPU computing on every major cloud service provider (CSP).

Deep learning and HPC require a full-stack platform approach. In addition to the Deep Learning Examples, NVIDIA NGC includes containerized resources for frameworks and applications, as well as pretrained models, Helm charts, and scripts.

Using TF32 precision, the A100 delivers significant speedups for computer vision, speech, and language, as well as recommender system networks. The biggest speedup seen was on BERT natural language processing (NLP) networks, where TF32 brought a 5x time-to-solution (TTS) speedup. You might notice that NVIDIA included a network called ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately), which is a novel pretraining method for language representations. ELECTRA outperforms existing techniques, given the same compute budget, on a wide array of NLP tasks. For computer vision networks, the TTS speedup was ~2.5x. For DLRM, a recommender system network created by Facebook, there was a ~3x TTS speedup.

In addition to the networks shown in the chart, we evaluated data across 23 different networks from the Deep Learning Examples on GitHub. All told, we saw an average TTS speedup of 2.6x across these networks. For more information about performance data, see NVIDIA Data Center Deep Learning Product Performance.

NVIDIA makes it easy for you to take advantage of TF32. It's the default precision in the cuDNN library, which accelerates key math operations for neural networks. Both the TensorFlow and PyTorch deep learning frameworks now natively support TF32 and are available on NGC. TF32 is also supported in cuBLAS (basic linear algebra) and cuTENSOR (tensor primitives).

For HPC applications, cuSOLVER, a GPU-accelerated linear solver, can take advantage of TF32. Linear solvers use algorithms with repetitive matrix-math calculations and are found in a wide range of fields such as earth science, fluid dynamics, healthcare, material science, nuclear energy, and oil and gas exploration.

If a network doesn't converge, the training run never completes, which is why looking only at throughput gives an incomplete performance picture.
A network's training run is complete when a stopping criterion is reached, such as the incremental accuracy improvement falling below a threshold, or after finishing a set number of passes over the training data, known as epochs.
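To make the idea of a stopping criterion concrete, here is a minimal Python sketch of the two criteria mentioned above: stop when the accuracy gain between epochs becomes too small, or when an epoch limit is reached. Everything in it is an assumption for illustration only: the function names `epochs_to_solution` and `tts_speedup`, the `min_improvement` threshold, and the accuracy values are hypothetical and do not come from the benchmarks discussed in this post.

```python
def epochs_to_solution(accuracies, min_improvement=0.001, max_epochs=100):
    """Count the epochs run before a stopping criterion is met.

    accuracies: per-epoch validation accuracy values (hypothetical data).
    Stops when the epoch limit is reached, or when the gain over the
    previous epoch drops below min_improvement.
    """
    previous = None
    epochs = 0
    for accuracy in accuracies:
        epochs += 1
        if epochs >= max_epochs:
            break  # criterion 2: fixed number of epochs reached
        if previous is not None and accuracy - previous < min_improvement:
            break  # criterion 1: incremental improvement too small
        previous = accuracy
    return epochs


def tts_speedup(baseline_seconds, new_seconds):
    """Time-to-solution speedup of a new run relative to a baseline run."""
    return baseline_seconds / new_seconds


# Hypothetical accuracy curve: training stops at the fourth epoch,
# where the gain over the previous epoch falls below 0.001.
curve = [0.5, 0.6, 0.65, 0.6504, 0.66]
print(epochs_to_solution(curve))
```

Time to solution is then the wall-clock time spent until that stopping point, which is why a run with high throughput that never meets its criterion has no meaningful TTS.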

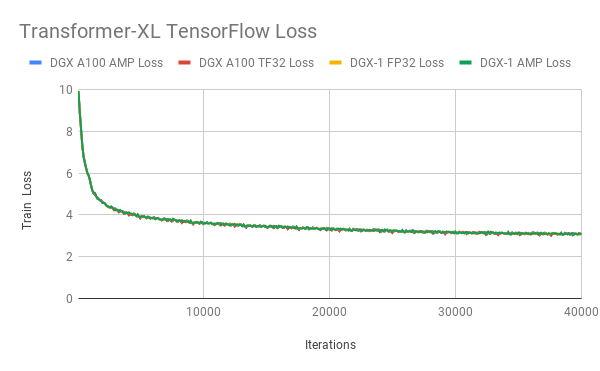

These gains enable applications to be trained faster and more often. Some modern AI applications are retraining networks multiple times per day. If you're in the early stages of architecting your neural network, faster training times mean completing the model construction faster, speeding time to a deployed application. The chart above shows the TTS speedups that TF32 can deliver across different networks, running in an 8-GPU server configuration. Time to solution is the critical metric when evaluating training performance.


Accelerated training across use cases

Compare training performance between A100 TF32 precision and the previous-generation V100 FP32. What you see is time-to-solution (TTS) speedups ranging from 2x to over 5x. These speedups come with zero code changes and induce virtually no accuracy loss, so that networks converge more quickly.

Floating-point data represents decimal numbers such as 3.14 in hardware using a sign bit (positive or negative number), an exponent (the power of two that scales the number), and a mantissa (the significant digits). The exponent expresses the range of the number, while the mantissa expresses its precision. TF32 strikes a balance, because it has the same range as FP32 and enough bits to deliver AI training's required precision without using so many bits that it slows processing and bloats memory.

For maximum performance, the A100 also has enhanced 16-bit math capabilities, supporting both FP16 and Bfloat16 (BF16) at double the rate of TF32. Employing automatic mixed precision (AMP), you can double performance with just a few lines of code. For more information about TF32 mechanics, see TensorFloat-32 in the A100 GPU Accelerates AI Training, HPC up to 20x.
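As a rough illustration of the sign, exponent, and mantissa fields described above, the following Python sketch splits an FP32 value into its fields and emulates TF32 by keeping only the top 10 mantissa bits. The field widths (8 exponent bits shared with FP32, 10 mantissa bits for TF32) are the published TF32 format rather than something stated in this post. The helper names `float32_bits`, `split_fields`, and `to_tf32` are made up for this example, and the plain truncation used here is an assumption: real Tensor Core hardware may round differently.

```python
import struct


def float32_bits(x):
    """Return the IEEE 754 single-precision bit pattern of x as an int."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def split_fields(x):
    """Split x (as FP32) into (sign, biased exponent, mantissa) integers."""
    bits = float32_bits(x)
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, biased by 127
    mantissa = bits & 0x7FFFFF       # 23 bits in FP32
    return sign, exponent, mantissa


def to_tf32(x):
    """Emulate TF32 by zeroing the low 13 of the 23 FP32 mantissa bits.

    Assumption: simple truncation, used here only for illustration.
    The sign bit and the 8-bit exponent are left untouched, which is
    why the representable range matches FP32.
    """
    bits = float32_bits(x) & 0xFFFFE000
    return struct.unpack(">f", struct.pack(">I", bits))[0]


sign, exponent, mantissa = split_fields(3.14)
print(sign, exponent, hex(mantissa))
print(to_tf32(3.14))
```

Under this truncation, 3.14 becomes 3.138671875: the exponent is unchanged, and only the low-order mantissa bits, which carry the finest precision, are lost.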
